Educational Impact Model for the NAS Milestones

The GME Today® Educational Impact Model (EIM) is the primary assessment methodology used to guide and develop GME Today®'s online educational courses, which assist residents and residency programs in achieving certain NAS Milestones. The Educational Impact Model enables GME Today® coursework to quantify the knowledge, competence, and performance gained by residents who participate in GME Today® courses related to milestones in the NAS. Interactive educational courses use a variety of formats, including video case vignettes, learner feedback, and survey instruments, to assist residents in self-directed learning and progress toward achievable goals. Residents’ progress is measured and tracked by the GME Today® software to provide program directors and coordinators with documentation of progress within the milestones.

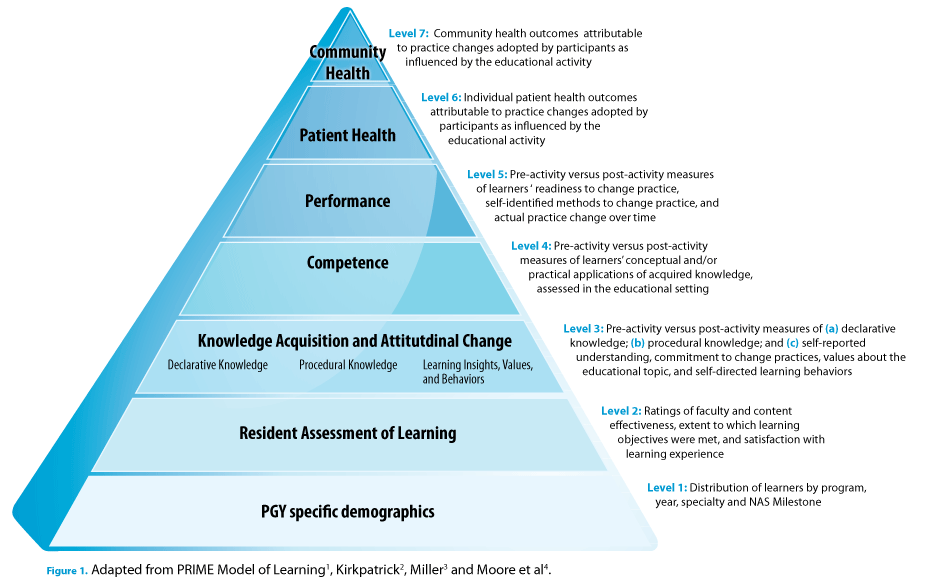

The GME Today® Educational Impact Model is adapted from the PRIME® Model of Learning [1], an internationally recognized and utilized learning model largely shaped by conceptual frameworks developed by Donald Kirkpatrick [2], George Miller [3], and Donald Moore and colleagues [4] (Figure 1). The PRIME® Model of Learning [1] has been adopted by several European countries as the educational impact model for developing information and communication technology (ICT)-enhanced simulation training, such as teaching basic surgical skills to novice practitioners [5]. PRIME® is the developer of the GME Today® residency coursework. The adoption of its learning model in Europe supports a policy roadmap that can revolutionize physician training, and guides its impact assessment model to measure training effectiveness and indicators for public health priorities [6].

This learning model shapes the Educational Impact Model of the new GME Today® residency online training courses to meet the tenets of the Next Accreditation System. The Educational Impact Model incorporates measures to identify the effectiveness of the learning format, recognizing that physicians-in-training learn in different ways. For example, multiple exposures to a given topic are more effective than single exposures [7]. Multimedia programs are more effective than single-media interventions [8]. Interactive and procedural formats are more effective than lecture-based CME activities in helping participants retain information and change practice behaviors [9]. Web-based programs are as effective as live, small-group, interactive programs in terms of knowledge, confidence, and practice behaviors [10].

The Educational Impact Model also incorporates case vignettes to give residents a mechanism to practice incorporating scientific knowledge into clinical practice, thereby improving competence in their clinical reasoning skills. Unlike case studies, which typically involve a longitudinal examination of a single patient (a case) to identify and analyze issues and solutions, case vignettes provide short, impressionistic scenes that focus on a specific point in time or point of care in a patient's treatment and management cycle. In 2000, Peabody and colleagues demonstrated that case vignettes (compared to chart review and standardized patients) are a valid and comprehensive method to measure clinical variations and processes of care in actual clinical practice [11]. In the GME Today® coursework, case vignettes are applied as an effective learning mechanism to augment Objective Structured Clinical Examinations (OSCE) or standardized patients.

References

1. PRIME Education, Inc. PRIME Model of Learning. Educational needs assessments, activity development, and outcomes assessment at PRIME: Applications of established conceptual frameworks and principles of adult learning. Science of CME. 2009. Available at: https://primece.com/science-of-cme/

2. Kirkpatrick D. Revisiting Kirkpatrick's four-level model. Training and Development. 1996;50:54-59.

3. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65(9 Suppl):S63-67.

4. Moore DE, Green JS, Gallis HA. Achieving desired results and improved outcomes: integrating planning and assessment throughout learning activities. J Contin Educ Health Prof. 2009;29(1):1-15.

5. SIMBASE Project: Promotion of ICT-enhanced simulations based learning in healthcare centres. Lifelong Learning. (no longer available)

6. SimBase 2-year EU-funded project. Available at: http://www.simbase.co.

7. Marinopoulos SS, Dorman T, Ratanawongsa N, et al. Effectiveness of Continuing Medical Education. Rockville, MD: Agency for Healthcare Research and Quality; 2007.

8. US Department of Education, Office of Planning, Evaluation, and Policy Development. Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies. Washington, DC: US Dept of Education; 2009.

9. Stephens MB, McKenna M, Carrington K. Adult learning models for large-group continuing medical education activities. Fam Med. 2011;43(5):334-337.

10. Fordis M, King JE, Ballantyne CM, et al. Comparison of the instructional efficacy of Internet-based CME with live interactive CME workshops: a randomized controlled trial. JAMA. 2005;294:1043-1051.

11. Peabody JW, Luck J, Glassman P, Dresselhaus TR, Lee M. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA. 2000;283(13):1715-1722.